For many of our customers, metaphactory is a key component of their data landscape: their knowledge graph ties together various data silos, provides a semantic access layer based on semantic models, and becomes one of the key systems to support decisions and processes from research to sales.

Keeping all involved systems up and running is a big task that requires many different skills. Besides the operational perspective of working with infrastructure, development, and deployment processes, security plays a growing part in this story.

This became apparent once more at the beginning of this year with the big splash made by the Log4Shell vulnerability in the widely used log4j software library. Like many other software vendors, metaphacts was quick to provide updates of metaphactory with fixed versions of that dependency. Discovering and mitigating known vulnerabilities quickly, to avoid any risk to our customers' security and data safety, is an important goal for us.

This requires a resilient and especially secure setup with a reliable operations model and tight interaction with relevant infrastructure, development and deployment processes. metaphactory provides a number of features that make it a well-behaved and secure part of today's complex software deployments.

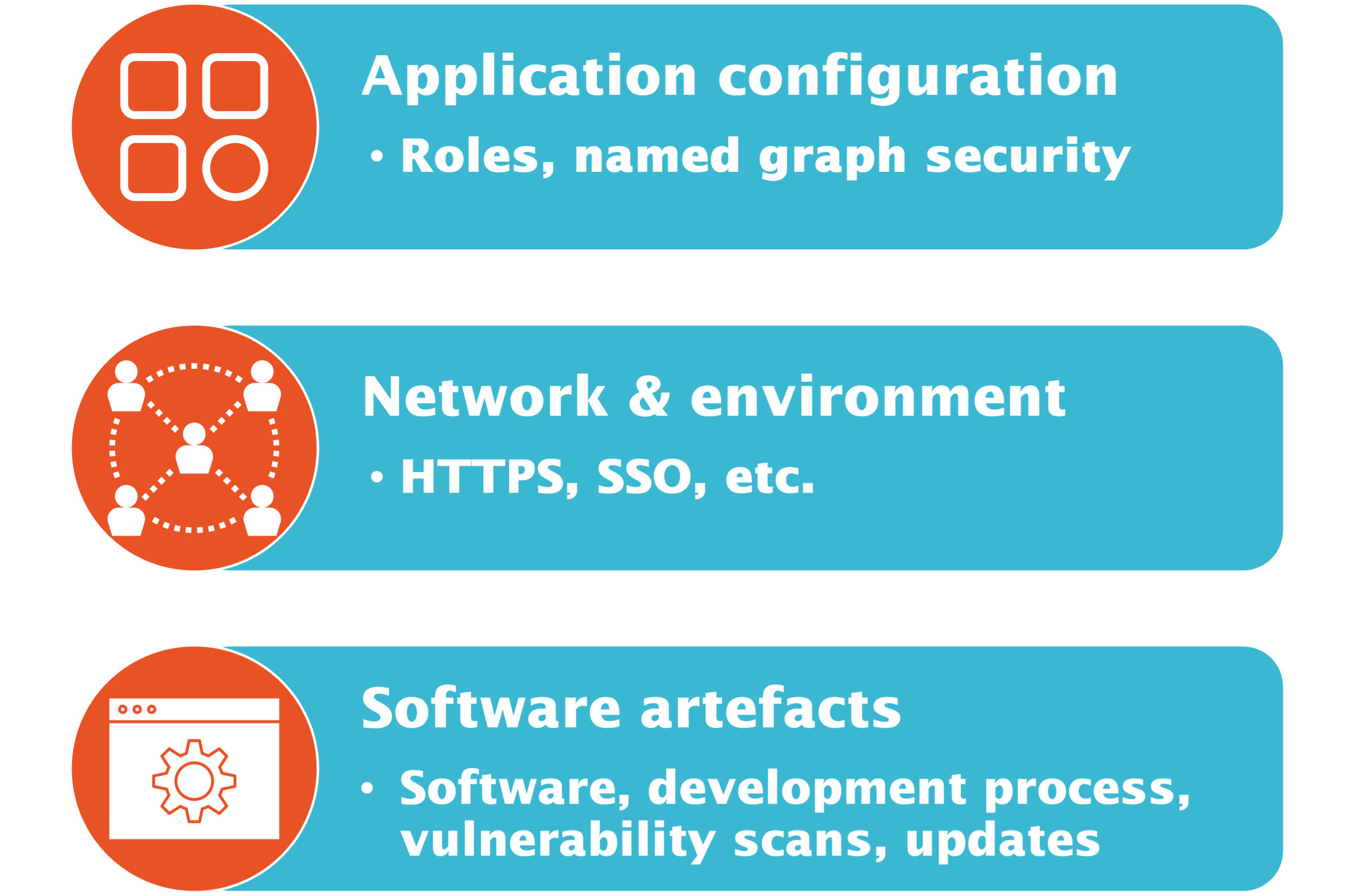

Security consists of different aspects which are layered on top of each other as shown in the following diagram:

This blog post gives a quick overview of the overall picture and then dives deeper into securing the network and environment, which form the foundation for any application deployment in an enterprise IT landscape.

Good Citizen of the Enterprise Network

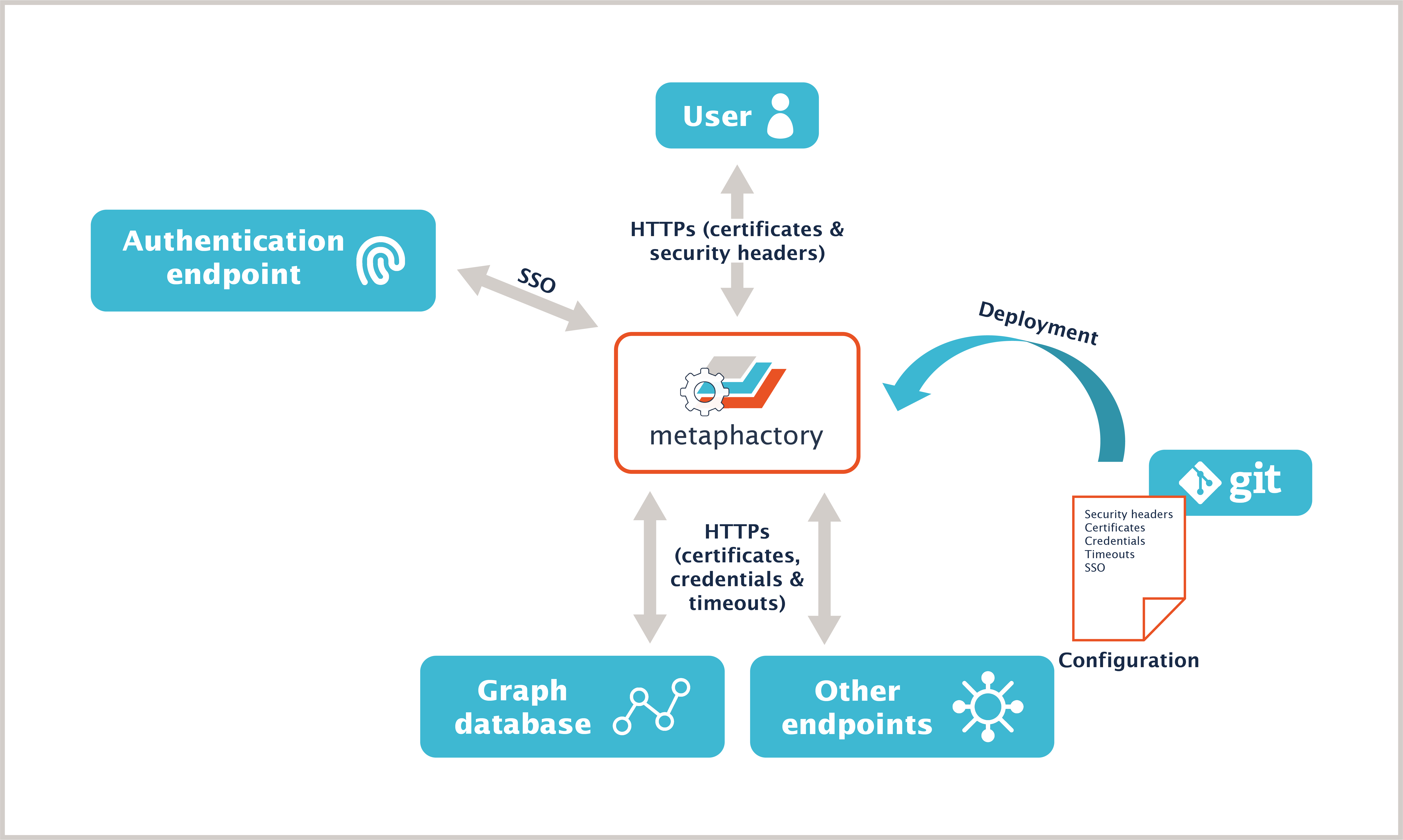

metaphactory is not an isolated application but integrates into an enterprise network, as depicted in the graphic below. As a well-behaved citizen in that environment, it adheres to best practices and uses well-established mechanisms and procedures when interacting with other systems in the environment.

The following sections discuss the topics of:

- Mechanisms for secure end-user access to metaphactory

- Integration with enterprise-wide authentication (single sign-on, SSO)

- Integration with databases and other integrated systems

- Configuration management with DevOps and GitOps

Secure end-user network access to metaphactory

Nowadays, nearly all externally accessible websites and services run on a secure networking layer (e.g., HTTPS for websites). metaphactory supports HTTPS out of the box to allow secure access even without further setup. This also applies to our metaphactory AMIs, which come with pre-configured, self-signed certificates in the Nginx proxy in order to allow encrypted transfer through HTTPS. The same can be achieved with the docker-compose setup, for which all steps are documented; note, however, that its default configuration is HTTP.

Note that web browsers will warn the users about the self-signed certificates, as the identity of the website is not authenticated by a recognized authority. Accessing this installation with a web browser for the first time requires the user to accept the default self-signed certificate.

While this provides a baseline for security, the next important step is to replace the self-signed certificate with a proper, trusted certificate.

For configuration of certificates in enterprise applications, the best practice is to do this in a reverse proxy or load balancer in front of the application: the proxy is responsible for terminating SSL and transparently proxying the request to the target system.

In our docker-compose setup (which is also used for the AMIs), we provide a configuration for Nginx: here, it is possible either to supply your own SSL certificates or to use the ACME protocol to retrieve a certificate issued by Let's Encrypt.

When running on AWS, the Elastic Load Balancer (ELB) should be used instead, as it is tightly integrated into the AWS cloud offering.

Note that it is also possible to enable an HTTPS connector with valid certificates directly on metaphactory (see the metaphactory documentation for more details).
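Whichever component terminates TLS, a browser only accepts the certificate if the host name it connects to is listed in the certificate's Subject Alternative Name (SAN) extension. The Python sketch below illustrates a simplified version of that check for DNS names, allowing at most one leading wildcard label. The function name hostname_matches_san is hypothetical; real TLS clients (including Python's own ssl module) perform this check themselves and also handle IP address entries, which the sketch ignores.

```python
def hostname_matches_san(hostname: str, san_dns_names: list[str]) -> bool:
    """Simplified SAN check: exact match or a single leading wildcard label.

    Hypothetical helper for illustration only; it ignores IP address
    entries and other edge cases that real TLS libraries handle.
    """
    host = hostname.lower().rstrip(".")
    for entry in san_dns_names:
        pattern = entry.lower().rstrip(".")
        if pattern == host:
            return True
        if pattern.startswith("*."):
            suffix = pattern[1:]  # e.g. ".kg.example.com"
            if host.endswith(suffix):
                label = host[: -len(suffix)]
                # The wildcard covers exactly one non-empty label.
                if label and "." not in label:
                    return True
    return False


if __name__ == "__main__":
    san = ["metaphactory.example.com", "*.kg.example.com"]
    print(hostname_matches_san("metaphactory.example.com", san))  # True
    print(hostname_matches_san("dev.kg.example.com", san))        # True
    print(hostname_matches_san("a.b.kg.example.com", san))        # False
```

The last case shows why a certificate for "*.kg.example.com" does not cover deeper subdomains: each additional host name level needs its own SAN entry.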

SSL/TLS encryption and Certificates

Certificates are used for authenticating the identity of a website or service, while the underlying Transport Layer Security (TLS) protocol (the successor of Secure Sockets Layer, SSL) secures the connection by encrypting the transfer. Note that in this blog post we do not go into the details of Public Key Infrastructures (PKIs).

Signing Certificates

Certificates can either be created and signed locally (self-signed certificates) or by a Certificate Authority (CA). Self-signed certificates (as also used by metaphactory itself when started without further configuration) are not trusted by default, so they need to be added to the trust store of each application or browser that connects to the service.

The authenticity of a certificate is based on a trust model: certificates are signed either by a globally trusted certificate authority (directly or through a chain of intermediate CAs) or by an enterprise CA.

Identity of the Certificate

For certificates to be accepted by browsers, the Subject Alternative Name (SAN) field of the certificate must contain the host name(s) used to access metaphactory. When deploying metaphactory on AWS using our AMI, a self-signed certificate is generated using the public host name and IP address of the EC2 instance.

Hardening of HTTP Security Headers

Besides encrypting connections, additional measures can be taken to harden them and to protect against further threats such as Cross-Site Scripting (XSS) or Cross-Site Request Forgery (CSRF).

Modern browsers support a broad variety of security-related HTTP headers (which, despite the name, also apply to HTTPS) that protect the user from common attacks such as cross-site scripting, clickjacking, or data injection.

These headers can be configured on the web server (or in the reverse proxy) and provide an additional layer of security to the user.

The most important ones are listed in the following table:

| Name | Description | Default for metaphactory |
|---|---|---|
| Strict Transport Security (HSTS) | Informs the browser that the website and all its resources should only be accessed via HTTPS. | max-age=31536000; includeSubDomains; preload |
| Content Security Policy (CSP) | Tells the browser which resources the client is allowed to load and from where. For example, it allows the administrator to specify that all resources can only be fetched from the site's origin. | default-src 'self' 'unsafe-inline'; script-src 'self' 'unsafe-inline' 'unsafe-eval' https://connectors.tableau.com/; img-src 'self' https: data:; |
| X-Content-Type-Options | Indicates that the MIME types advertised in the Content-Type headers are deliberately configured and should be followed rather than changed, which prevents MIME type sniffing by the browser. | nosniff |
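To illustrate what configuring these headers "on the web server" amounts to, the following Python sketch wraps a WSGI application and adds the HSTS and X-Content-Type-Options defaults from the table to every response, unless the application already set them. It is an illustrative stand-in only: SecurityHeadersMiddleware and hello_app are hypothetical names, and in a metaphactory deployment the headers are set by metaphactory or by the reverse proxy in front of it.

```python
# Default values taken from the table above.
SECURITY_HEADERS = [
    ("Strict-Transport-Security", "max-age=31536000; includeSubDomains; preload"),
    ("X-Content-Type-Options", "nosniff"),
]


class SecurityHeadersMiddleware:
    """Hypothetical WSGI middleware that adds missing security headers."""

    def __init__(self, app, headers=SECURITY_HEADERS):
        self.app = app
        self.headers = list(headers)

    def __call__(self, environ, start_response):
        def add_headers(status, response_headers, exc_info=None):
            # Header names are case-insensitive; do not override what the app set.
            present = {name.lower() for name, _ in response_headers}
            missing = [(n, v) for n, v in self.headers if n.lower() not in present]
            return start_response(status, list(response_headers) + missing, exc_info)

        return self.app(environ, add_headers)


def hello_app(environ, start_response):
    """Hypothetical application standing in for the protected service."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello"]


if __name__ == "__main__":
    def print_start_response(status, headers, exc_info=None):
        print(status)
        for name, value in headers:
            print(f"{name}: {value}")

    SecurityHeadersMiddleware(hello_app)({}, print_start_response)
```

Checking header names case-insensitively keeps an application-specific value (for example, a stricter policy for one endpoint) from being duplicated or overwritten.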

Explicit configuration is required when embedding browser-rendered content into metaphactory (e.g., loading pictures from an external source) or when embedding content provided by metaphactory into other applications. One such example is accessing metaphactory from the Tableau Web Data Connector (WDC). The Content Security Policy (CSP) header lists all sites which are allowed to embed content or from which content may be embedded, and it needs to be extended for any additional URLs. Missing entries can be diagnosed with the browser's developer tools, which show warnings about which content could not be loaded and from which endpoint.
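Extending the CSP for an additional site usually means appending a source to one of its directives. The Python sketch below (helper names are hypothetical) parses the default policy from the table, adds a source to a directive, and serializes the result. If the directive is not yet present and its name ends in "-src", it is seeded with the sources of default-src, because absent fetch directives fall back to default-src and adding only the new source would otherwise narrow the policy. This is a simplification: it ignores intermediate fallbacks such as script-src-elem to script-src, and directives like frame-ancestors, which have no default-src fallback, are created with the new source only.

```python
# Default policy from the table above.
DEFAULT_CSP = (
    "default-src 'self' 'unsafe-inline'; "
    "script-src 'self' 'unsafe-inline' 'unsafe-eval' https://connectors.tableau.com/; "
    "img-src 'self' https: data:;"
)


def parse_csp(policy: str) -> dict[str, list[str]]:
    """Split a CSP header value into {directive: [sources]}."""
    directives: dict[str, list[str]] = {}
    for part in policy.split(";"):
        tokens = part.split()
        if tokens:
            directives[tokens[0].lower()] = tokens[1:]
    return directives


def serialize_csp(directives: dict[str, list[str]]) -> str:
    """Join directives back into a header value."""
    return "; ".join(" ".join([name, *sources]) for name, sources in directives.items()) + ";"


def allow_source(policy: str, directive: str, source: str) -> str:
    """Return a copy of the policy that also allows `source` for `directive`."""
    directives = parse_csp(policy)
    if directive not in directives:
        # Absent *-src directives fall back to default-src; seed with a copy
        # of those sources so the new directive does not narrow the policy.
        fallback = directives.get("default-src", []) if directive.endswith("-src") else []
        directives[directive] = list(fallback)
    if source not in directives[directive]:
        directives[directive].append(source)
    return serialize_csp(directives)


if __name__ == "__main__":
    print(allow_source(DEFAULT_CSP, "script-src", "https://cdn.example.org"))
```

Here https://cdn.example.org is a placeholder for whichever external site actually needs to be allowed; the helper is idempotent, so applying it twice yields the same policy.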

Authentication & authorization using SSO

With the connection secured, we now have to ensure that only authorized users can access metaphactory.

Employees in the enterprise typically access a plethora of systems in their daily work: working with office documents, email, messaging systems, as well as internal documentation systems, cloud storage, etc.

One big requirement, therefore, is centralized management of the identities of all users in an organization. This enables user management that complies with company policies and avoids the need to create users and passwords in a multitude of systems.

With so-called Single Sign-On (SSO), a user has only one centrally managed identity and can use it to log into any system within the enterprise (assuming access to the respective application has been granted). This means that authentication (proving the user's identity) is always handled by the identity provider, with a familiar interface. All integrated systems redirect the user to the identity provider for authentication, which redirects the user back to the application after successful authentication. The response from the identity provider might include additional properties such as the full name, the email address, and other information such as group memberships.

Access to the systems is typically handled by adding a user to a group managed by the identity provider. This is done by an enterprise administrator. The groups are mapped to roles within metaphactory which define the level of access a user has within the system. Finally, metaphactory enforces granular permissions which are granted to any of the roles a user is assigned via the provided groups.
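The chain from identity-provider groups to roles to permissions can be sketched in a few lines of Python. All group, role, and permission names below are hypothetical placeholders, and the sketch shows only the lookup logic; it is not metaphactory's configuration format or permission syntax.

```python
# Hypothetical mapping from identity-provider groups to metaphactory roles.
GROUP_TO_ROLES = {
    "kg-readers": {"guest"},
    "kg-editors": {"editor"},
    "kg-admins": {"admin", "editor"},
}

# Hypothetical permissions granted to each role.
ROLE_PERMISSIONS = {
    "guest": {"data:read"},
    "editor": {"data:read", "data:write"},
    "admin": {"data:read", "data:write", "system:configure"},
}


def roles_for(groups: list[str]) -> set[str]:
    """Collect all roles mapped from the groups sent by the identity provider."""
    roles: set[str] = set()
    for group in groups:
        roles |= GROUP_TO_ROLES.get(group, set())  # unknown groups grant nothing
    return roles


def is_permitted(groups: list[str], permission: str) -> bool:
    """A permission is granted if any of the user's roles grants it."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles_for(groups))
```

Because access flows only through group membership, granting or revoking access is a change in the identity provider, not in metaphactory.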

Connecting metaphactory to a central identity provider can be done in a number of ways. Established protocols are SAML, OAuth, and OpenID Connect (OIDC), with OIDC now being the preferred way of performing SSO for metaphactory. OIDC is offered by most cloud providers as well as by most widely used enterprise identity management solutions. We will revisit OIDC in more detail in another blog post.

To set up SSO, the metaphactory instance needs to be registered with the identity provider with the target URL being the main configuration property. The Authentication Providers documentation describes all configuration properties in detail. An example including additional configuration steps like role mapping will be covered in a later blog post.
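At the protocol level, the redirect to the identity provider is an OIDC authorization request. The Python sketch below builds such a request from the standard parameters of the authorization code flow (response_type, client_id, redirect_uri, scope, state) and verifies the state value when the provider redirects back. The endpoint, client ID, and redirect URI are hypothetical; in OIDC terms, the URL registered with the identity provider is the redirect URI. A real integration also exchanges the returned code for tokens and validates the ID token, which is omitted here.

```python
import secrets
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical values; in practice they come from the identity provider registration.
AUTHORIZATION_ENDPOINT = "https://idp.example.com/oauth2/authorize"
CLIENT_ID = "metaphactory"
REDIRECT_URI = "https://metaphactory.example.com/callback"


def build_authorization_url() -> tuple[str, str]:
    """Return (url, state); the state must be kept in the user's session."""
    state = secrets.token_urlsafe(16)  # binds the callback to this request (CSRF protection)
    params = {
        "response_type": "code",          # authorization code flow
        "client_id": CLIENT_ID,
        "redirect_uri": REDIRECT_URI,
        "scope": "openid profile email",  # "openid" is required for OIDC
        "state": state,
    }
    return f"{AUTHORIZATION_ENDPOINT}?{urlencode(params)}", state


def extract_code(callback_url: str, expected_state: str) -> str:
    """Check the state on the redirect back and return the authorization code."""
    query = parse_qs(urlparse(callback_url).query)
    if query.get("state", [None])[0] != expected_state:
        raise ValueError("state mismatch: possible forged callback")
    return query["code"][0]
```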

Securing database connections at the network level

So far we've looked at the connection from the user to metaphactory, but metaphactory also has to connect to a database to retrieve the data that will be displayed to and can be edited by the user.

For proper end-to-end security, it is important to also secure this connection. In a production environment, metaphactory should use HTTPS when talking to a database, which encrypts the data flow at the network level and hence protects against eavesdropping.

Using HTTPS requires setting up proper certificates in the database and for metaphactory to trust these certificates. Setting up certificates for the database depends on the respective database system and is described in the documentation of your database vendor.

In enterprises, certificates are typically issued by a company-wide CA, so in order to connect to any system within the company network, metaphactory needs to trust that company root certificate by importing it into its trust store.

The process of importing a certificate into the truststore is described in the Administration FAQ. Please note that when replacing the default truststore with a custom one, the contents of the shipped truststore (provided by the upstream OpenJDK project) should be preserved as it contains the root certificates of widely used certificate authorities which are also used for publicly accessible systems on the internet.
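metaphactory runs on the JVM, where this is done with a Java truststore. As a language-neutral illustration of the same principle, the Python sketch below starts from the platform's default trusted roots, adds a company root CA on top of them rather than replacing them, and uses that context for a SPARQL query over HTTPS. The CA file name and the endpoint URL are hypothetical, and the GET-with-query-parameter request is just one of the forms the SPARQL protocol allows.

```python
import ssl
import urllib.parse
import urllib.request
from typing import Optional


def make_client_context(company_ca_file: Optional[str] = None) -> ssl.SSLContext:
    """TLS client context trusting the default roots plus an optional company CA."""
    # create_default_context() loads the default CA certificates and enables
    # certificate verification and host name checking.
    context = ssl.create_default_context()
    if company_ca_file:
        # Adds the company root CA; the default roots remain trusted.
        context.load_verify_locations(cafile=company_ca_file)
    return context


def sparql_select(endpoint: str, query: str, context: ssl.SSLContext) -> bytes:
    """Send a SPARQL query via HTTP GET over a verified HTTPS connection."""
    url = f"{endpoint}?{urllib.parse.urlencode({'query': query})}"
    request = urllib.request.Request(url, headers={"Accept": "application/sparql-results+json"})
    with urllib.request.urlopen(request, context=context, timeout=30) as response:
        return response.read()
```

A call could look like sparql_select("https://mydb.example.com/sparql", "SELECT * WHERE { ?s ?p ?o } LIMIT 1", make_client_context("company-root-ca.pem")). If the database's certificate chains to neither the default roots nor the company CA, the request fails with a certificate verification error instead of silently connecting.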

Configuration management with DevOps and GitOps

Modern systems do not work in isolation but rather communicate with many other systems and require a deployment that ensures that all these connections are in place. Deployment processes are often automated and scripted (so-called DevOps processes for development and operations). These scripts and automation steps are often defined in a Git repository (so-called GitOps processes) to track all changes over time for provenance, change management, and the possibility of rollback to any previous version, e.g., in case of misconfigurations or when encountering regressions in a deployment.

Following this approach further improves security, as all configuration, especially the security-relevant configuration described in the previous sections, can be maintained in code, versioned, audited, and tested before it is deployed to production.

Externalized secrets for secure DevOps processes

One big part of the configuration management process is handling credentials for authentication (proving identity) and authorization (defining access permissions) when accessing other systems using service accounts. While all configuration data is stored in config files or scripts, which then reside in a Git repository, credentials are an exception to this rule.

Credentials are sensitive and need to be handled with care to avoid unintended or malicious access to systems. Moreover, credentials are often subject to company policies for compliance with security rules and automated rotation of secrets and certificates.

For this reason, credentials are not contained directly in the respective configuration file, but rather injected into it using placeholders. This allows for defining the configuration, e.g., of a database connection, with all relevant details, but the username and password or similar information required for authentication (including certificates) are kept separate and are injected by replacing variable references which act as placeholders.
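Because the configuration lives in Git, it can help to check automatically that no literal credentials slip into it. The Python sketch below is a hypothetical pre-commit or CI check: it scans Turtle repository configurations for the mph:username and mph:password properties used in metaphactory repository configurations and flags every value that is not a ${...} placeholder. It is a line-based heuristic, not a Turtle parser, and assumes values are written in straight double quotes.

```python
import re

# mph:username / mph:password followed by a double-quoted literal.
_CREDENTIAL = re.compile(r'(mph:(?:username|password))\s+"([^"]*)"')


def find_inline_credentials(turtle_text: str) -> list[tuple[int, str]]:
    """Return (line number, property) for credentials that are not placeholders."""
    findings = []
    for lineno, line in enumerate(turtle_text.splitlines(), start=1):
        for match in _CREDENTIAL.finditer(line):
            prop, value = match.groups()
            if not (value.startswith("${") and value.endswith("}")):
                findings.append((lineno, prop))
    return findings


def check_files(paths: list[str]) -> bool:
    """Print findings for each file; return True if all files are clean."""
    clean = True
    for path in paths:
        with open(path, encoding="utf-8") as handle:
            for lineno, prop in find_inline_credentials(handle.read()):
                print(f"{path}:{lineno}: literal value for {prop}")
                clean = False
    return clean
```

In a pipeline, a False result from check_files would be turned into a non-zero exit code to block the commit or deployment.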

metaphactory provides the Externalized Secrets mechanism for this. An example repository configuration might look like this:

```turtle
@prefix rep: <http://www.openrdf.org/config/repository#> .
@prefix sail: <http://www.openrdf.org/config/sail#> .
@prefix sr: <http://www.openrdf.org/config/repository/sail#> .
@prefix sparqlr: <http://www.openrdf.org/config/repository/sparql#> .
@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .
@prefix mph: <http://www.metaphacts.com/ontologies/platform/repository#> .

[] a rep:Repository ;
    rep:repositoryID "default" ;
    rep:repositoryImpl [
        rep:repositoryType "metaphactory:SPARQLBasicAuthRepository" ;
        sparqlr:query-endpoint <http://mydb.example.com/sparql/> ;
        # The placeholders below are replaced with externalized secret values
        mph:username "${repo.username:myuser}" ;
        mph:password "${repo.password}" ;
    ] .
```

In this example, the username and password are specified as the variables repo.username and repo.password; the username also has a default value (myuser), which applies if no other value is provided.
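To make the placeholder syntax concrete, the Python sketch below resolves references of the form ${name} and ${name:default} as used in the example above. It is a simplified model written for this post, not metaphactory's implementation; for instance, it does not handle escaping or nested placeholders.

```python
import re

# ${name} or ${name:default}; the name may not contain ":" or "}".
_PLACEHOLDER = re.compile(r"\$\{([^:}]+)(?::([^}]*))?\}")


def resolve_placeholders(text: str, values: dict[str, str]) -> str:
    """Replace placeholders with provided values, falling back to defaults."""
    def replace(match):
        name, default = match.group(1), match.group(2)
        if name in values:
            return values[name]
        if default is not None:
            return default
        # No value and no default: fail loudly instead of using an empty secret.
        raise KeyError(f"no value provided for '{name}'")

    return _PLACEHOLDER.sub(replace, text)


config = 'mph:username "${repo.username:myuser}" ;\nmph:password "${repo.password}" ;'
print(resolve_placeholders(config, {"repo.password": "s3cret"}))
```

Raising an error for a missing value without a default is a deliberate choice in this sketch: a repository silently configured with an empty password is harder to diagnose than a failed startup.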

Providing replacement values for those variables can be done in a number of ways:

- using a properties file,

- stored in encrypted form in a KeePass Password Safe file,

- using environment variables, or

- via additional parameters on the command line when launching metaphactory.
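How several of these sources might be combined can be sketched as a simple lookup chain. In the Python sketch below, the precedence order (command line, then environment variables, then properties file), the -Dname=value argument style borrowed from Java system properties, and the mapping of repo.password to the environment variable REPO_PASSWORD are all assumptions made for illustration; consult the metaphactory documentation for the actual rules. The KeePass source is left out because reading KeePass files requires a third-party library.

```python
import re
from collections.abc import Mapping
from typing import Optional


def parse_properties(text: str) -> dict[str, str]:
    """Minimal .properties parser: key=value or key: value, '#'/'!' comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        parts = re.split(r"\s*[=:]\s*", line, maxsplit=1)
        props[parts[0]] = parts[1] if len(parts) == 2 else ""
    return props


def parse_cli(args: list[str]) -> dict[str, str]:
    """Collect -Dname=value arguments (assumed argument style)."""
    values = {}
    for arg in args:
        if arg.startswith("-D") and "=" in arg:
            name, value = arg[2:].split("=", 1)
            values[name] = value
    return values


def lookup_secret(name: str, cli: Mapping[str, str], env: Mapping[str, str],
                  properties: Mapping[str, str]) -> Optional[str]:
    """Return the first value found, using an assumed precedence order."""
    env_name = name.upper().replace(".", "_")  # assumed naming convention
    for source, key in ((cli, name), (env, env_name), (properties, name)):
        if key in source:
            return source[key]
    return None
```

In a real process, env would simply be os.environ, which already behaves like a read-only mapping for this purpose.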

This allows using the best method for the respective deployment scenario. For example, in a Kubernetes or OpenShift environment, the database credentials might be stored as a Secret and injected into the metaphactory container using environment variables. When running metaphactory using our docker-compose setup, one might rather create a KeePass file and store the credentials there; the encryption password for the KeePass file would then be passed to metaphactory as a command line argument provided in the .env file. Credential injection can be applied to many configurations within metaphactory: repository (e.g., database) configurations, LDAP servers, Ephedra connections, Git repositories for Git Storage, AWS credentials for S3 Storage, proxy connections to external systems, etc.

Outlook

Security in software deployments has many facets, as depicted in the image below, and one blog post is not enough to cover them all.

A follow-up post will dive deeper into authentication and authorization using single sign-on and also cover role-based access control and assignment of roles based on groups. Organization of roles and different types of permissions are also a part of this story.

Additionally, topics like data security / graph security and the use of native database features to enforce them are highly relevant and will be discussed in following blog posts as well.

Finally, secure software development and deployment processes are essential for software used in enterprises. The importance of applying security patches promptly was once again brought to everybody's attention by the recently discovered Log4j / Log4Shell vulnerability. How this affects software development at metaphacts and the deployment of our software in your environment will also be discussed in another post.